Rumor: DeepSeek R2 Parameters Surge to 1.2 Trillion, 97.3% Cheaper! US Stocks Face Major Turbulence!

Technical Breakthrough

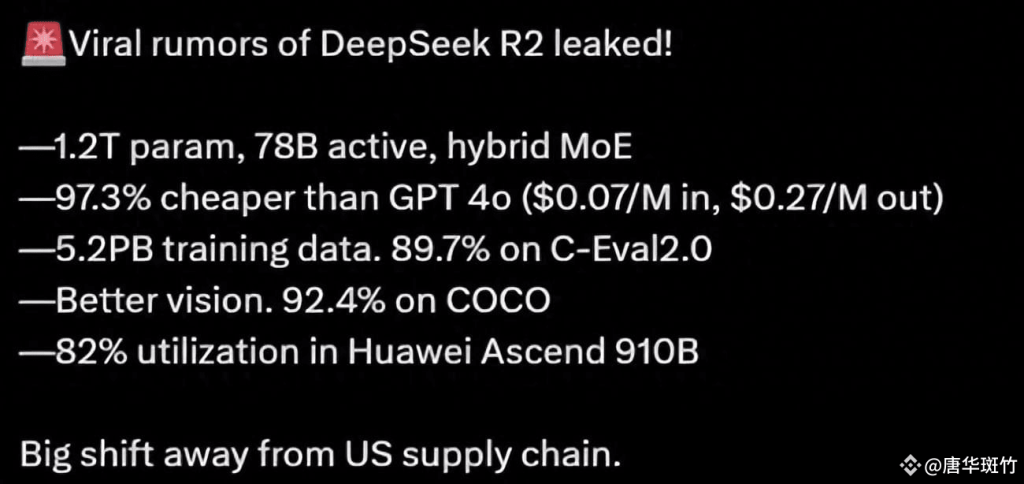

Recently, various sources have leaked information that DeepSeek is about to release its new generation large language model, DeepSeek R2. According to social media leaks, the key highlights are as follows:

– **Architecture Innovation**: Reportedly employs a self-developed Hybrid MoE 3.0 architecture with 1.2 trillion total parameters, of which only 78 billion are dynamically activated during computation, significantly improving efficiency;

– **Domestically Produced Hardware**: Trained on Huawei Ascend 910B chip clusters, with computing power utilization rate reaching 82%, performance approaching 91% of NVIDIA A100 clusters;

– **Multimodal Leap**: Achieves 92.4% accuracy in COCO image segmentation tasks, surpassing CLIP models by 11.6 percentage points;

– **Vertical Domain Applications**: Medical diagnosis accuracy exceeds 98%, industrial quality inspection false detection rate reduced to 7.2 parts per 10 million, pushing practical technology to new heights.

It’s worth noting that three months ago, the release of DeepSeek R1 was followed by NVIDIA losing nearly $600 billion in market value in a single day. If the leaks are confirmed, R2’s “low cost + high performance” combination could deliver an even more powerful blow to American tech giants that rely on premium-priced chips.

DeepSeek R2’s parameter count has reportedly reached 1.2 trillion, nearly double the 671 billion of its predecessor R1. The leaks place this figure close to the scale of top-tier international models such as GPT-4 Turbo and Google’s Gemini 2.0 Pro. If accurate, the increase would suggest a significant gain in the model’s learning capacity and its ability to handle complex tasks.

DeepSeek R2 adopts a Mixture of Experts (MoE) architecture, a technology that distributes work among multiple “small expert” modules. Simply put, the model automatically selects the most suitable “experts” for each input, improving efficiency while reducing wasted computation. According to leaks, only 78 billion of R2’s parameters are activated for any given input, so the actual computation touches roughly 6.5% of the 1.2 trillion total. This design allows the model to maintain high performance while significantly reducing operational costs.
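The routing idea described above can be illustrated with a minimal top-k gating sketch. This is not DeepSeek's implementation, and nothing about "Hybrid MoE 3.0" is public; the toy experts, the gate scores, and the names `route_top_k` and `moe_forward` are hypothetical and chosen only for illustration. The last lines check the leaked 78 billion / 1.2 trillion ratio.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k):
    """Run only the selected experts and combine their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical toy setup: 8 "experts", each a simple scaling function.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
random.seed(0)
gate_scores = [random.gauss(0.0, 1.0) for _ in experts]

selected = route_top_k(gate_scores, k=2)  # only 2 of 8 experts run
output = moe_forward(3.0, experts, gate_scores, k=2)

# Arithmetic from the leak: 78 billion active out of 1.2 trillion total.
TOTAL_PARAMS = 1.2e12
ACTIVE_PARAMS = 78e9
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"active fraction: {active_fraction:.1%}")
```

In a real model the gate scores come from a learned layer applied to each token, and the experts are feed-forward networks rather than scalar functions; the sketch only shows why the compute per input scales with the selected experts and not with the total parameter count.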

In terms of training data, DeepSeek R2 uses a 5.2PB (1PB = 1 million GB) high-quality corpus covering finance, law, patents, and other domains. Through multi-stage semantic distillation technology, the model’s instruction-following accuracy has improved to 89.7%. This means it is more skilled at understanding complex human instructions, such as analyzing legal documents or generating financial reports.

Cost Reduction of 97.3%

DeepSeek R2’s biggest breakthrough remains its dramatic cost reduction. According to leaks, its unit inference cost is 97.3% lower than GPT-4’s. For example, generating a 5,000-word article with GPT-4 costs approximately $1.35, while DeepSeek R2 would reportedly require only about $0.035.
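As a quick sanity check on these numbers, the sketch below computes the reduction implied by the two quoted prices and, conversely, the price implied by a 97.3% reduction. The function name `cost_reduction` is a hypothetical helper; the dollar figures are the ones quoted in the leak, not measured values.

```python
def cost_reduction(baseline_cost, new_cost):
    """Fractional reduction of new_cost relative to baseline_cost."""
    return 1.0 - new_cost / baseline_cost


# Figures quoted in the leak for a 5,000-word article.
GPT4_COST_USD = 1.35
R2_COST_USD = 0.035

# Reduction implied by the two quoted prices.
reduction = cost_reduction(GPT4_COST_USD, R2_COST_USD)

# Price implied by applying the headline 97.3% figure to the GPT-4 price.
implied_r2_cost = GPT4_COST_USD * (1.0 - 0.973)

print(f"reduction from quoted prices: {reduction:.1%}")
print(f"R2 cost implied by 97.3%: ${implied_r2_cost:.4f}")
```

The quoted prices work out to a reduction of about 97.4%, and a 97.3% reduction would put the R2 cost at roughly $0.036, so the example and the headline figure agree to within rounding.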

The core reason for this cost reduction reportedly lies in hardware adaptation and optimization. DeepSeek R2 is said to be trained on Huawei Ascend 910B chip clusters, with chip utilization reaching 82% and overall efficiency reaching 91% of comparable NVIDIA A100 clusters. If accurate, this suggests that domestically produced chips in China are approaching leading international levels in AI training and could reduce dependence on NVIDIA.

Multimodal Capabilities

Another highlight of DeepSeek R2 is its enhanced multimodal capabilities. In the visual understanding module, it adopts a ViT-Transformer hybrid architecture, achieving 92.4% accuracy in object segmentation tasks on the COCO dataset, an improvement of 11.6 percentage points over traditional CLIP models. In simple terms, it can more precisely identify objects in images, such as distinguishing pedestrians, vehicles, and traffic signs in a street scene photo.

Additionally, R2 reportedly supports 8-bit quantization compression, reducing model size by 83% with less than 2% accuracy loss. If confirmed, this could help high-performance AI run locally on future smartphones and smart home devices without relying on cloud servers.
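To make the idea of 8-bit quantization concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is a generic textbook scheme, not the method the leak attributes to R2, which is not described; the sample weights and the names `quantize_int8`, `dequantize`, and `size_reduction` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in q]


def size_reduction(bits_before, bits_after):
    """Fractional storage saving when moving between bit widths."""
    return 1.0 - bits_after / bits_before


# Hypothetical weights, for illustration only.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

reduction_from_fp32 = size_reduction(32, 8)  # 32-bit floats to 8-bit ints
reduction_from_fp16 = size_reduction(16, 8)  # 16-bit floats to 8-bit ints
print(f"fp32 -> int8: {reduction_from_fp32:.0%}, fp16 -> int8: {reduction_from_fp16:.0%}")
```

By this arithmetic, 8-bit storage alone saves 75% relative to 32-bit weights or 50% relative to 16-bit weights, so the reported 83% figure would presumably involve additional compression that the leak does not describe.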

Global AI Competition

The DeepSeek R2 leak has already triggered intense reactions in capital markets. Due to its cost advantages and technological independence, it may pose a threat to American tech companies that rely on NVIDIA GPUs. Analysts predict that if R2’s performance is confirmed, NVIDIA’s stock price could face short-term fluctuations, while Chinese AI industry chain-related enterprises might experience a new round of growth.

This event also reflects the new landscape of global AI competition. If the leaks hold up, DeepSeek R2 would show that breakthroughs can be achieved through architectural innovation and adaptation to domestically produced hardware. The reported 82% utilization rate of Huawei Ascend chips would indicate that China’s computing power infrastructure is becoming internationally competitive.

Although the leaked information is exciting, some industry insiders point out contradictions in it. Discussions on overseas websites show that some of the material was translated from unofficial Chinese channels and then recirculated, which adds to the uncertainty. DeepSeek has not yet confirmed a release date, but given the recent collective study session on artificial intelligence held by China’s leadership, the combination of policy support and technological progress may accelerate R2’s launch.

From chips to algorithms, from data to applications, every link in China’s AI industry chain is accelerating toward independence. Replacing NVIDIA with Huawei Ascend and building vertical-domain barriers with a 5.2PB Chinese corpus: behind these moves is a high-stakes race for technological influence over the next decade.