The Rise of Edge Generative AI: Revolutionizing Intelligence at the Edge

In the rapidly evolving landscape of artificial intelligence, a new paradigm is emerging that promises to reshape how we interact with technology in our daily lives. Edge Generative AI—the fusion of generative AI capabilities with edge computing—is bringing unprecedented intelligent processing power directly to consumer devices, revolutionizing everything from smartphones to vehicles, security systems, and beyond.

What is Edge Generative AI?

Edge Generative AI combines two powerful technological concepts: generative artificial intelligence and edge computing. While traditional AI systems often rely on remote cloud servers for processing, edge generative AI enables AI models to run directly on local devices—such as smartphones, cameras, cars, or IoT devices—near the data source.

This innovative approach allows generative AI capabilities, which can create new content like text, images, or code from prompts, to function efficiently on end-user devices without continuous cloud connectivity. By processing data locally, edge generative AI delivers faster responses, enhanced privacy protection, and the ability to function even without network access.

Cloud AI vs. Edge Generative AI: Understanding the Difference

Cloud-Based AI Processing

Cloud-based AI relies on remote server infrastructure with substantial computing power to handle complex tasks like large-scale model training or high-resolution image synthesis. While this approach offers flexible scalability for deployment from individual users to enterprise-level applications, it faces several challenges:

- Higher latency due to data transfer requirements

- Heavy dependence on network connectivity

- Privacy concerns related to sending raw data to cloud servers

- Increased bandwidth costs from transmitting large volumes of data

- Greater risk of user data being exposed in transit or on third-party servers

Edge-Based AI Processing

Edge generative AI addresses these challenges by deploying AI capabilities directly on local devices:

- Enhanced privacy protection since sensitive information remains on the local device

- Low latency with real-time responses, ideal for applications requiring immediate feedback

- Reduced bandwidth requirements through minimized data transmission

- Reliable operation even without network connectivity

- Improved system stability, with fewer external dependencies that can fail
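The latency and bandwidth points above can be made concrete with a back-of-the-envelope model. This is a minimal sketch in which every number (frame size, uplink speed, round-trip time, and both inference times) is a hypothetical assumption chosen for illustration, not a measurement. Note that the edge NPU is deliberately assumed to be slower than the cloud GPU, yet the edge result still arrives sooner because uploading the raw data dominates the cloud path.

```python
# Hypothetical back-of-the-envelope latency model. Every number used below is
# an illustrative assumption, not a measurement of any real system.

def cloud_latency_ms(payload_bytes: int, uplink_mbps: float,
                     rtt_ms: float, server_infer_ms: float) -> float:
    """Network round trip + time to upload the raw data + remote inference."""
    upload_ms = payload_bytes * 8 / (uplink_mbps * 1e6) * 1000
    return rtt_ms + upload_ms + server_infer_ms


def edge_latency_ms(local_infer_ms: float) -> float:
    """On-device inference only: the raw data never leaves the device."""
    return local_infer_ms


if __name__ == "__main__":
    # Assumed: a 500 KB camera frame, a 10 Mbit/s uplink, a 60 ms round trip,
    # 20 ms of inference on a cloud GPU vs. 80 ms on a slower edge NPU.
    cloud = cloud_latency_ms(500_000, uplink_mbps=10, rtt_ms=60, server_infer_ms=20)
    edge = edge_latency_ms(80)
    print(f"cloud: {cloud:.0f} ms, edge: {edge:.0f} ms")
```

With these assumptions the cloud path takes about 480 ms, 400 ms of which is spent uploading the frame, versus 80 ms on the device. The same upload term is also what drives the bandwidth cost, and it disappears entirely when processing stays local.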

For scenarios where real-time processing, privacy, or limited connectivity is crucial, edge generative AI offers significant advantages. As advanced AI chips and optimized models continue to evolve, edge generative AI is expanding into numerous applications that require privacy-sensitive and real-time processing.

How Edge Generative AI is Transforming Industries

Security and Surveillance

Edge generative AI is redefining video monitoring systems by enabling smart cameras to perform intelligent analysis locally, transmitting only essential information or event summaries rather than continuous video streams. This dramatically improves monitoring efficiency and privacy protection.
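The "analyze locally, transmit only summaries" pattern can be sketched as a filter that keeps every frame on the camera and emits a small event record only when something changes. In this minimal sketch, simple frame differencing is a hypothetical stand-in for the on-device generative model, and the `Event` record, the `summarize` function, and the threshold are illustrative assumptions rather than any vendor's design.

```python
# Hypothetical sketch: frames stay on the camera, and only a small event
# summary is emitted when consecutive frames differ enough. Frame
# differencing stands in here for the on-device generative model.
from dataclasses import dataclass


@dataclass
class Event:
    frame_index: int
    change_score: float


def mean_abs_diff(a: list[int], b: list[int]) -> float:
    """Average per-pixel change between two equal-size grayscale frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def summarize(frames: list[list[int]], threshold: float) -> list[Event]:
    """Return one Event for each frame whose change from the previous frame exceeds threshold."""
    events = []
    for i in range(1, len(frames)):
        score = mean_abs_diff(frames[i - 1], frames[i])
        if score > threshold:
            events.append(Event(i, score))
    return events


if __name__ == "__main__":
    still = [10] * 16              # a static 4x4 scene
    moved = [10] * 8 + [200] * 8   # half of the pixels change sharply
    for event in summarize([still, still, moved, moved], threshold=5.0):
        print(event)               # only this record would be transmitted
```

Here four frames go in but only one small record (for frame index 2, where the scene changes) would leave the device. A real system would replace the differencing step with a model that can also describe what happened.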

Users can now retrieve specific video segments with natural-language queries. For instance, if your car gets scratched, you can simply ask your car what happened, and the system will retrieve and analyze the relevant footage, a significant improvement in user interaction.

Automotive Applications

In vehicles, edge generative AI enhances both safety and personalization. It enables natural language communication between users and vehicles, adapting to driving conditions (external visibility, speed), answering questions about vehicle operation or surroundings, and responding to passenger or driver behavior (such as detecting a child left in the car, forgotten items, or driver fatigue).

Edge AI-powered vehicle assistants can also provide personalized recommendations for optimal routes or adjust in-cabin environments based on user preferences, creating a more comfortable and convenient travel experience.

Personal Devices and IoT

Edge generative AI is revolutionizing personal devices like smartphones, wearables, and IoT endpoints, making them both smarter and more privacy-conscious. AI assistants on phones can provide health management advice or voice translation services without internet connectivity, while photos can be processed and optimized directly on devices, eliminating the risk of data uploads to third-party servers.

Technical Requirements for Edge Generative AI

Transformer Architecture

The rapid development of generative AI is fundamentally powered by transformers, a relatively new type of neural network introduced in 2017 in the Google Brain paper "Attention Is All You Need." Transformers often outperform established architectures such as Recurrent Neural Networks (RNNs) in natural language processing and Convolutional Neural Networks (CNNs) in handling images, videos, and other multi-dimensional data.

The key architectural improvement in transformer models is their attention mechanism, which lets the model weigh how relevant every other word (or image region) in the input is when processing each one, leading to better inferences from the data. This enables transformers to learn contextual relationships between words in a text more accurately than RNNs and to capture more complex relationships within images than CNNs.
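The attention idea can be sketched as scaled dot-product attention, softmax(QKᵀ/√d)·V: each position scores its query against every key, turns the scores into weights that sum to 1, and outputs the weighted average of the value vectors. This is a minimal single-head sketch in plain Python for readability; real transformers add learned projection matrices, multiple heads, masking, and optimized tensor kernels, all of which are omitted here.

```python
# Minimal scaled dot-product attention (single head, no masking, no learned
# projections), written in plain Python for readability rather than speed.
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    """Compute softmax(Q K^T / sqrt(d)) V for lists of equal-length vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query position relates to every key position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    # Three toy "tokens" with 2-dimensional embeddings (self-attention: Q = K = V).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in attention(x, x, x):
        print([round(v, 3) for v in row])
```

Because the weights come from a softmax, a query that matches one key much more strongly than the others effectively "focuses" on that position's value, which is the behavior the paragraph above describes.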

Model Size and Memory Challenges

Generative AI models require massive parameter sets: the pre-trained weights, or coefficients, in a neural network that recognize patterns and generate new ones. While a standard CNN such as ResNet50 has about 25 million parameters, GPT-3, the model family behind the original ChatGPT, has 175 billion, and GPT-4 is widely reported (though not officially confirmed) to have about 1.75 trillion.

For edge devices with limited memory, running such large models presents significant challenges. However, smaller large language models (LLMs) with far lower resource requirements are emerging. Llama 2, for example, is available in 7, 13, and 70 billion parameter versions. The 7 billion parameter Llama 2, while still substantial, falls within the practical range for embedded Neural Processing Units (NPUs).
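A quick way to see why parameter count matters on a memory-constrained device is to multiply it by the bytes stored per parameter. This minimal sketch estimates weight storage only (no activations, KV cache, or runtime overhead, so real requirements are higher) and includes lower-precision formats such as INT8 and INT4. Storing weights at reduced precision (quantization) is a common way to shrink models for edge hardware; it is an addition here, not something the text above states.

```python
# Rough weight-storage estimate: parameter count x bytes per parameter.
# Ignores activations, KV cache, and runtime overhead, so real memory
# requirements are higher than these figures.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {
    "ResNet50": 25e6,
    "Llama 2 7B": 7e9,
    "Llama 2 70B": 70e9,
    "GPT-3 (175B)": 175e9,
}


def weight_gb(params: float, precision: str) -> float:
    """Weight storage in gigabytes (10**9 bytes) at the given precision."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(f"{p}: {weight_gb(params, p):.2f} GB" for p in BYTES_PER_PARAM)
        print(f"{name:14} {sizes}")
```

By this estimate the 7B Llama 2 needs about 14 GB at FP16 but roughly 3.5 GB at INT4, which is what brings it within reach of embedded hardware, while a 175B model still needs tens of gigabytes even at 4 bits.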

Text-to-image generators like Stable Diffusion, with around 1 billion parameters, can run comfortably on NPUs. Edge devices are expected to eventually run LLMs with up to 60-70 billion parameters, and MLCommons has added the 6 billion parameter generative model GPT-J to its MLPerf edge AI benchmark suite.

Memory and Bandwidth Considerations

Generative AI algorithms combine extensive data movement with significant computational complexity. By weighing these two demands against each other, architects can determine whether a given architecture is compute-limited (its multipliers cannot keep up with the data that memory can deliver) or memory-limited (memory capacity or bandwidth cannot supply data fast enough to keep the multipliers busy).
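This balance is often analyzed with a roofline-style comparison: divide the operations a workload performs by the bytes it must move (its arithmetic intensity), and compare that with the operations per byte the chip can sustain (its peak compute divided by its memory bandwidth). In this minimal sketch the accelerator figures (40 TOPS, 50 GB/s) are hypothetical and do not describe any real chip, and the cost functions assume an idealized case where each matrix is read or written exactly once.

```python
# Roofline-style check for one workload on one accelerator. The hardware
# figures used below are hypothetical, not specs of any real chip.

def classify(ops: float, bytes_moved: float,
             peak_ops_per_s: float, bandwidth_bytes_per_s: float) -> str:
    intensity = ops / bytes_moved                      # ops the workload performs per byte moved
    balance = peak_ops_per_s / bandwidth_bytes_per_s   # ops the chip can perform per byte delivered
    return "compute-bound" if intensity > balance else "memory-bound"


def matmul_cost(n: int, bytes_per_elem: int = 1) -> tuple[float, float]:
    """(ops, bytes) for an n x n by n x n matrix multiply, each matrix moved once."""
    return 2 * n**3, 3 * n * n * bytes_per_elem


def matvec_cost(n: int, bytes_per_elem: int = 1) -> tuple[float, float]:
    """(ops, bytes) for an n x n matrix times an n-element vector."""
    return 2 * n * n, (n * n + 2 * n) * bytes_per_elem


if __name__ == "__main__":
    peak, bw = 40e12, 50e9   # assumed: 40 TOPS NPU with 50 GB/s of DRAM bandwidth
    print("matmul:", classify(*matmul_cost(2048), peak, bw))
    print("matvec:", classify(*matvec_cost(2048), peak, bw))
```

On these assumed figures the chip needs about 800 operations per byte to stay busy. A large matrix-matrix multiply (about 1,365 ops/byte) clears that bar and is compute-bound, while a matrix-vector product (about 2 ops/byte) is memory-bound no matter how many multipliers the chip has.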

Text-to-image models have a better balance of compute and bandwidth requirements: processing 2D images demands more computation, while their parameter counts stay comparatively small (around a billion). Large language models are more imbalanced, performing relatively little computation for each byte of weights they must move. Even smaller LLMs (6-7 billion parameters) are memory-limited.
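The LLM imbalance can be estimated directly. When a model generates text one token at a time for a single user, essentially every weight must be read from memory once per token, but each weight takes part in only about two operations (a multiply and an add). This minimal sketch computes both resulting speed limits; the 7B INT8 model and the 40 TOPS / 50 GB/s accelerator are hypothetical assumptions, and the KV cache and activations are ignored, so real throughput would be lower.

```python
# Upper bounds on single-stream LLM decoding speed. Assumes every weight is
# read from DRAM once per generated token (batch size 1) and ignores the KV
# cache and activations, so real throughput will be lower.

def max_tokens_per_s(params: float, bytes_per_param: float,
                     bandwidth_bytes_per_s: float, peak_ops_per_s: float) -> dict:
    memory_limit = bandwidth_bytes_per_s / (params * bytes_per_param)
    compute_limit = peak_ops_per_s / (2 * params)  # ~2 ops (multiply + add) per weight per token
    return {
        "memory_limit": memory_limit,
        "compute_limit": compute_limit,
        "bound_by": "memory" if memory_limit < compute_limit else "compute",
    }


if __name__ == "__main__":
    # Assumed: a 7B-parameter model stored in INT8 on a hypothetical 40 TOPS, 50 GB/s NPU.
    r = max_tokens_per_s(7e9, 1.0, 50e9, 40e12)
    print(f"memory limit:  {r['memory_limit']:.1f} tokens/s")
    print(f"compute limit: {r['compute_limit']:.0f} tokens/s")
    print("bound by:", r["bound_by"])
```

Under these assumptions memory caps generation at roughly 7 tokens per second, while the compute units could in principle sustain about 2,857, a gap of around 400 times. This is why even a 6-7 billion parameter model ends up memory-limited on edge hardware.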

Leading Companies in Edge Generative AI Chips

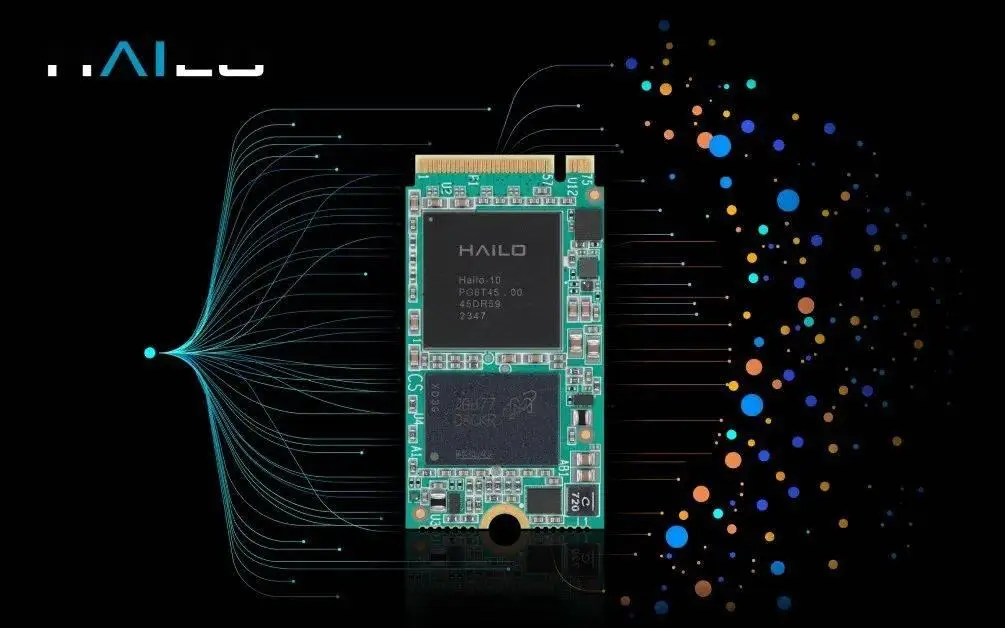

Hailo Technologies

Founded in 2017, Hailo is an Israeli chip company specializing in AI processors for edge devices. A prominent player in edge generative AI chips, Hailo has showcased its latest AI accelerator, the Hailo-10, designed to let edge devices run large language models offline with low latency and strong privacy protection.

Hailo’s processors handle basic AI tasks and also support complex generative workloads, which broadens their range of applications. The company has also presented technology demonstrations for Advanced Driver Assistance Systems (ADAS) that combine camera sensors, LiDAR, and other technologies to deliver pedestrian detection, 3D bird’s-eye-view perception, and automatic parking, all powered by its Hailo-8 accelerator.

Through its distinctive architecture and flexible ecosystem strategy, Hailo has carved out a position in the competitive AI chip market. Its processors are built for edge devices yet meet the demands of complex AI models while keeping power consumption low and cost-effectiveness high.

Future Trends in Edge Generative AI

The future of edge generative AI points toward a deep integration of efficient hardware and compact AI models. As AI chip technology advances, even low-power devices like smart cameras, personal assistant robots, and computers will be able to run powerful generative AI models, broadening the range of AI applications.

The rise of decentralized AI ecosystems means future AI computing will be more distributed, with edge devices handling tasks autonomously and depending less on central servers. Smart home devices that share data with one another locally are one example of this approach: keeping data on the home network enhances security and shortens response times.

Deeper industry integration and a widening range of application scenarios are also significant trends. As edge generative AI capabilities strengthen, applications are extending from smart driving and security to healthcare, retail, industrial inspection, and more. For instance, rural clinics that use local AI for disease diagnosis, without relying on remote servers, could greatly improve the accessibility and efficiency of medical services.

The emergence of edge generative AI marks a major shift in computing paradigms, transforming everything from intelligent surveillance and autonomous vehicles to smartphones, personal computers, and industrial IoT across multiple domains.