Advantech Launches GenAI Studio Edge AI Software Platform: Enabling Local Large Language Model Development and Advancing Edge AI Innovation

Accelerating AI Development to Address Industry Challenges

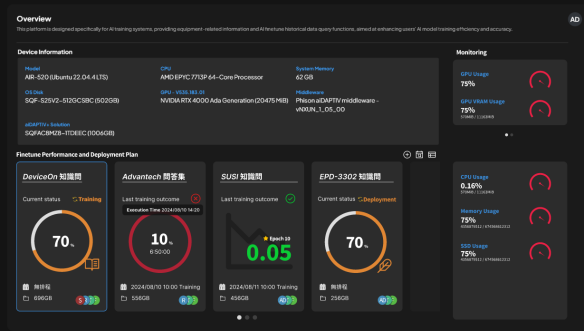

In early 2025, Advantech, a global AIoT platform and service provider, announced GenAI Studio, a new software product within its Edge AI Software Development Kit (Edge AI SDK). The product targets the growing demand for cost-effective, locally deployed large language model (LLM) solutions.

GenAI Studio is aimed at industry pain points such as the time factory operators spend waiting for critical information and the documentation workload of healthcare professionals. Its no-code, cost-effective platform simplifies LLM adoption, so businesses can deploy AI solutions quickly and improve productivity and operational efficiency.

Comprehensive LLM Development Toolkit with Advanced Integration Capabilities

GenAI Studio is built on Advantech’s Edge AI SDK and offers a versatile LLM platform that integrates both local and cloud LLMs. It supports multiple LLM providers and runtimes, including OpenAI, Gemini, Anthropic, and Ollama. It also introduces full-parameter fine-tuning optimized for environments with limited GPU resources, which broadens accessibility and improves performance.

GenAI Studio provides a comprehensive set of tools for large language model (LLM) development:

- Integrated Fine-tuning and Inference Services: GenAI Studio combines fine-tuning and inference functions, maximizing hardware utilization and enabling more flexible and efficient resource allocation.

- Advanced GPU Resource Management and Task Scheduling: These capabilities allow users to optimize AI hardware performance and enhance the cost-effectiveness of high-value equipment.

As AI develops rapidly, demand for easily accessible LLM solutions keeps growing, but many companies are held back by limited resources. Advantech’s Edge AI SDK addresses this challenge with a set of tools for efficiently evaluating, developing, and deploying edge AI applications. For example, fine-tuning a 70-billion-parameter LLM traditionally requires more than 30 GPUs with 48 GB of memory each; with Advantech’s Edge AI SDK, only 4 GPUs are needed. This is a reduction of roughly 87% in GPU count, which significantly lowers costs and makes LLM solutions more accessible.
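The arithmetic behind these figures can be checked with a short sketch. The GPU counts (30 and 4), the 48 GB per GPU, and the 70 billion parameters come from the paragraph above. The 16 bytes per parameter is an assumption: it is a common rule of thumb for mixed-precision full-parameter training with the Adam optimizer (weights, gradients, and optimizer states) and it ignores activation memory, so the result is only a rough lower bound, not Advantech’s own calculation.

```python
import math


def reduction_percent(baseline: float, reduced: float) -> float:
    """Percentage reduction when going from `baseline` to `reduced`."""
    return (baseline - reduced) / baseline * 100.0


def min_gpus_for_model_states(params: float, bytes_per_param: float,
                              gpu_mem_gb: float) -> int:
    """Lower bound on GPUs needed just to hold the training state.

    `bytes_per_param` is an assumed rule-of-thumb value; activations
    and framework overhead are not included.
    """
    total_gb = params * bytes_per_param / 1e9
    return math.ceil(total_gb / gpu_mem_gb)


# GPU counts from the article: 30 traditionally, 4 with the Edge AI SDK.
print(round(reduction_percent(30, 4), 1))       # 86.7

# 70B parameters, assumed 16 bytes/parameter, 48 GB per GPU.
print(min_gpus_for_model_states(70e9, 16, 48))  # 24
```

With a baseline of exactly 30 GPUs the reduction is 86.7%; because the article says "over 30", the quoted figure of 87% is consistent. Under the stated assumption, the training state alone would already occupy about 24 such GPUs before activations are counted.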

Empowering AI Innovation through Integration with Advantech’s AIR-520 Edge AI Server

To complement GenAI Studio, Advantech’s AIR-520 Edge AI Server provides a powerful hardware platform equipped with an NVIDIA RTX GPU and a Phison AI SSD. This combination delivers reliable, efficient computing power for AI applications in industries such as manufacturing, healthcare, and retail.

Advantech Edge AI SDK: Streamlining Workflows from Model Fine-tuning to Deployment

Advantech’s Edge AI SDK is a fully integrated platform designed for seamless edge AI development. With pre-configured hardware and optimized software, it provides a plug-and-play experience enabling cost-effective LLM customization, seamless toolkit compatibility, and easy management of large-scale edge deployments. Designed with reliability, scalability, and user-friendliness in mind, the Advantech Edge AI SDK simplifies the path to AI innovation. It now includes three core components:

- GenAI Studio: Facilitates the creation, evaluation, and integration of custom large language models locally in a cost-effective manner.

- Inference Suite: Enables quick optimization and evaluation of efficient AI runtimes compatible with embedded operating systems.

- Operations Platform: Provides efficient management of AI model and application updates in large-scale edge deployments, integrating MLOps to simplify operations.

Through its Edge AI SDK and the newly added GenAI Studio software module, Advantech has enhanced the ability of enterprises to develop and deploy AI solutions more efficiently and at lower costs.

Revolutionary Impact of DeepSeek Models on Industrial Edge Computing

DeepSeek’s large models are expected to have a disruptive impact across the industrial sector, particularly through their dynamic pruning and quantization techniques for edge deployment. The models support low-power operation on edge devices, including hardware with as little as 5 TOPS, with a threefold increase in inference speed.

Advantech selected several of its NVIDIA-based edge AI products and tested them with the current DeepSeek-R1 series of distilled models, ranging from 1.5B to 32B parameters. Let’s look at the latest test data!

Performance Testing Across Various Edge Computing Platforms

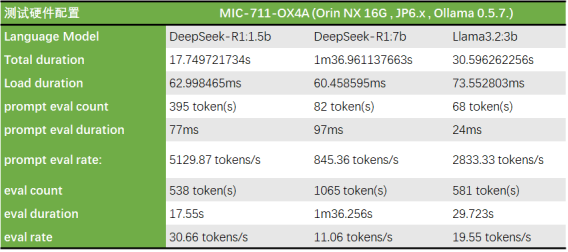

Micro Edge Inference Applications (Up to 100 TOPS Computing Power)

Recommended Models:

- ARM platform AI Box: ICAM-540 / MIC-711 / MIC-713

Supported DeepSeek-R1 Distilled Model Versions:

- DeepSeek-R1-Distill-Qwen-1.5B

- DeepSeek-R1-Distill-Qwen-7B

- DeepSeek-R1-Distill-Llama-8B

Test Model: MIC-711

With Super Mode enabled, the MIC-711-OX4A ran the same models with an overall performance improvement of nearly 25%. This demonstrates the significant gains Jetson Super Mode can deliver in AI applications.

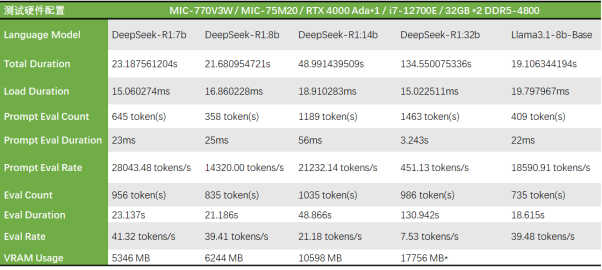
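A comparison like this can be reproduced from raw throughput measurements. The sketch below is an illustration only: the article does not name the benchmarking tool, so it assumes an Ollama-style runtime whose generation response reports `eval_count` (generated tokens) and `eval_duration` (nanoseconds). The sample numbers are hypothetical and are not Advantech’s measurements.

```python
def tokens_per_second(response: dict) -> float:
    """Decode throughput from an Ollama-style response dict.

    Assumes `eval_count` is the number of generated tokens and
    `eval_duration` is the generation time in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def percent_improvement(baseline_tps: float, new_tps: float) -> float:
    """Relative throughput gain of `new_tps` over `baseline_tps`, in percent."""
    return (new_tps - baseline_tps) / baseline_tps * 100.0


# Hypothetical measurements, NOT figures from the article.
normal_run = {"eval_count": 200, "eval_duration": 10_000_000_000}
super_run = {"eval_count": 200, "eval_duration": 8_000_000_000}

normal_tps = tokens_per_second(normal_run)  # 20.0 tokens/s
super_tps = tokens_per_second(super_run)    # 25.0 tokens/s
print(percent_improvement(normal_tps, super_tps))  # 25.0
```

Averaging over several prompts and models, as the word "overall" suggests, would give a more stable figure than a single run.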

Edge Inference Applications (100-500 TOPS Computing Power)

Recommended Models:

- ARM platform Edge AI Box: MIC-732 / MIC-733 / MIC-736

- X86 platform Edge AI Box: MIC-770V3

- Edge AI Server: HPC-6240 / HPC-7420 / SKY-602E3 (supporting 1-4 GPU cards)

Supported DeepSeek-R1 Distilled Model Versions:

- DeepSeek-R1-Distill-Qwen-1.5B

- DeepSeek-R1-Distill-Qwen-7B

- DeepSeek-R1-Distill-Qwen-14B

- DeepSeek-R1-Distill-Llama-8B

Test Model: MIC-770V3

DeepSeek-R1:32b uses approximately 18 GB of VRAM under this system configuration and runs normally. When the same model is tested on higher-end graphics cards, however, VRAM usage reaches 21 GB. Presumably, parameters are adjusted automatically on this system to reduce VRAM usage, at some cost to performance.

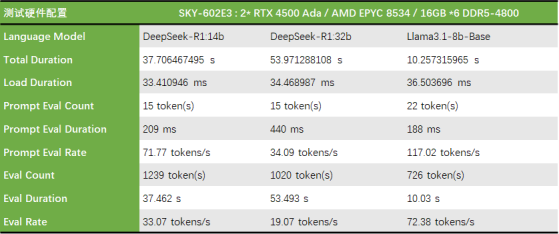
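For a sense of scale, the memory taken by model weights alone can be estimated from parameter count and precision. This is a rough sketch under stated assumptions: the article does not say which quantization was used, so the bit widths below are illustrative, the parameter count is the nominal 32 billion implied by the model name, and the KV cache and runtime buffers are not included.

```python
def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


# Nominal 32B parameters; bit widths are assumptions for illustration.
for bits in (4, 8, 16):
    print(bits, weight_memory_gb(32e9, bits))
# 4 16.0
# 8 32.0
# 16 64.0
```

Observed usage of 18 to 21 GB would be consistent with weights stored at roughly 4 bits plus cache and buffers, though this is an inference from the arithmetic and not something the test report states.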

High-Performance Edge AI Servers (500-1000+ TOPS Computing Power)

Recommended Models:

- Edge AI Server: HPC-6240 / HPC-7420 / SKY-602E3 (supporting 1-4 GPU cards)

Supported DeepSeek-R1 Distilled Model Versions:

- DeepSeek-R1-Distill-Qwen-14B

- DeepSeek-R1-Distill-Qwen-32B

- DeepSeek-R1-Distill-Llama-70B

Test Model: SKY-602E3
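The three tiers above can be summarized as a small lookup table. The sketch below simply encodes the supported-model lists from this article; the tier keys (`micro_edge`, `edge`, `high_performance`) and the function name are hypothetical labels chosen for illustration, not Advantech identifiers.

```python
# Supported DeepSeek-R1 distilled models per tier, as listed in the article.
# Tier keys are illustrative labels, not official product names.
SUPPORTED_MODELS = {
    "micro_edge": [
        "DeepSeek-R1-Distill-Qwen-1.5B",
        "DeepSeek-R1-Distill-Qwen-7B",
        "DeepSeek-R1-Distill-Llama-8B",
    ],
    "edge": [
        "DeepSeek-R1-Distill-Qwen-1.5B",
        "DeepSeek-R1-Distill-Qwen-7B",
        "DeepSeek-R1-Distill-Qwen-14B",
        "DeepSeek-R1-Distill-Llama-8B",
    ],
    "high_performance": [
        "DeepSeek-R1-Distill-Qwen-14B",
        "DeepSeek-R1-Distill-Qwen-32B",
        "DeepSeek-R1-Distill-Llama-70B",
    ],
}


def tiers_supporting(model: str) -> list[str]:
    """Return the tiers whose supported list contains `model`."""
    return [tier for tier, models in SUPPORTED_MODELS.items() if model in models]


print(tiers_supporting("DeepSeek-R1-Distill-Qwen-14B"))
# ['edge', 'high_performance']
print(tiers_supporting("DeepSeek-R1-Distill-Llama-70B"))
# ['high_performance']
```

A lookup like this makes it easy to see that the 14B model is the overlap point between the middle and top tiers, while the 32B and 70B models are listed only for the high-performance servers.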

Reshaping Industrial Productivity with “Edge-Native Intelligence”

Advantech has created a full-stack edge AI hardware matrix, from micro-edge to edge cloud, including AI modules, AI cards, AI acceleration cards, AI embedded and industrial platforms, AI edge platforms, edge AI servers, and edge rack servers. Computing power ranges from 5 TOPS to 2000+ TOPS, allowing users to flexibly select models according to business scenarios and achieve the optimal balance between precision and efficiency. Advantech aims to work with partners to reshape industrial productivity through “edge-native intelligence”!