Human Hallucinations Are Far Worse Than AI’s

People are quick to nitpick AI products like DeepSeek, YuanBao, and ChatGPT, and AI hallucinations draw the most criticism. Simply put, a hallucination is when you ask AI a question and it responds confidently with a seemingly flawless answer, yet part of the content is fabricated, leaving you skeptical of the rest.

To understand why AI hallucinations occur, we first need to understand that AI models primarily learn by identifying patterns in data for prediction. According to Google’s official explanation of AI hallucinations, there are two main causes:

The most fundamental issue is the quality and completeness of training data. If training data is incomplete, biased, or has other defects, AI models may learn incorrect patterns, leading to inaccurate predictions or hallucinations. For example, an AI model trained on medical image datasets to recognize cancer cells might incorrectly predict healthy tissue as cancerous if the dataset doesn’t include images of healthy tissue.
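The missing-data failure described above can be reproduced with a toy model. The following is a minimal sketch, not taken from any real medical system: it assumes each image is reduced to a single hypothetical "abnormality score" and uses a simple nearest-centroid classifier (the names `train`, `predict`, and the sample values are illustrative assumptions). When the training set contains no healthy examples, the model has no way to answer "healthy" at all.

```python
# Toy illustration under assumed data: a nearest-centroid classifier
# assigns a sample to the class whose training-set mean is closest.
# A class absent from the training data can never be predicted.

def train(samples):
    """samples: list of (score, label) pairs. Returns {label: mean score}."""
    sums, counts = {}, {}
    for score, label in samples:
        sums[label] = sums.get(label, 0.0) + score
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, score):
    """Return the label whose centroid is nearest to the given score."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))

# Hypothetical one-dimensional "abnormality score" per image.
complete = [(0.1, "healthy"), (0.2, "healthy"),
            (0.8, "cancerous"), (0.9, "cancerous")]
# Same data with every healthy example removed.
incomplete = [s for s in complete if s[1] == "cancerous"]

healthy_sample = 0.15
print(predict(train(complete), healthy_sample))    # healthy
print(predict(train(incomplete), healthy_sample))  # cancerous
```

The second model is not "lying"; it simply never saw the alternative, which is the sense in which incomplete training data produces confident but wrong output.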

Additionally, AI models may struggle to accurately understand real-world knowledge, physical properties, or factual information. This lack of foundation can lead models to generate outputs that seem reasonable but are actually incorrect, irrelevant, or meaningless. This even includes fabricating links to web pages that never existed. For instance, an AI model used to generate news report summaries might include details not present in the original report or completely fabricate information.

Comparing AI and Human Accuracy

An AI model’s accuracy depends primarily on the quality and diversity of its training data, and the model cannot actively verify whether information is authentic. Humans, in theory, can assess accuracy through critical thinking and multi-source verification. But is this really the case?

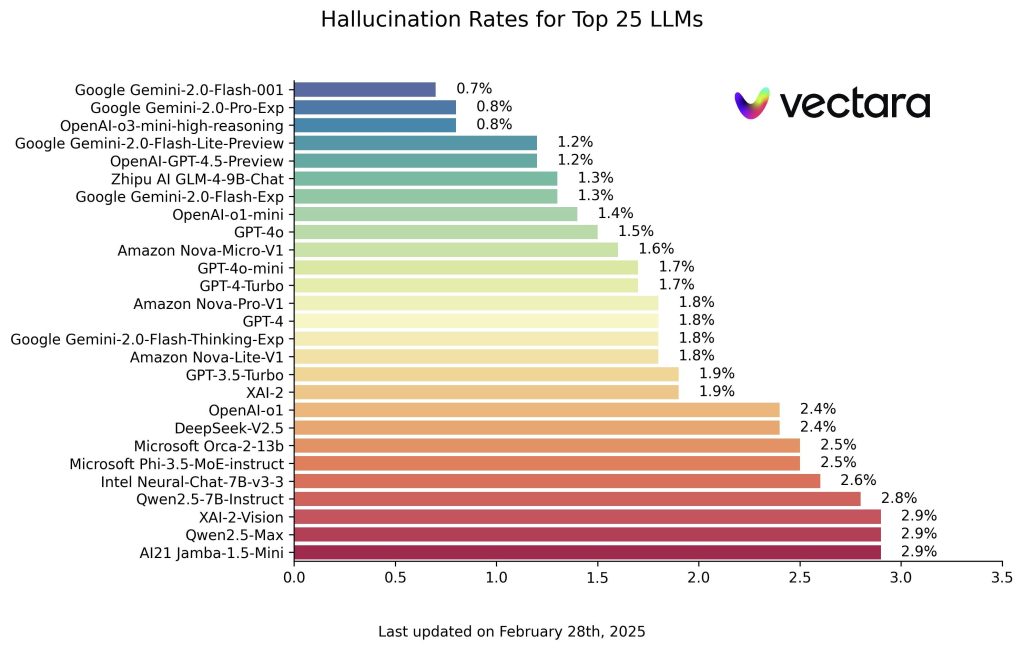

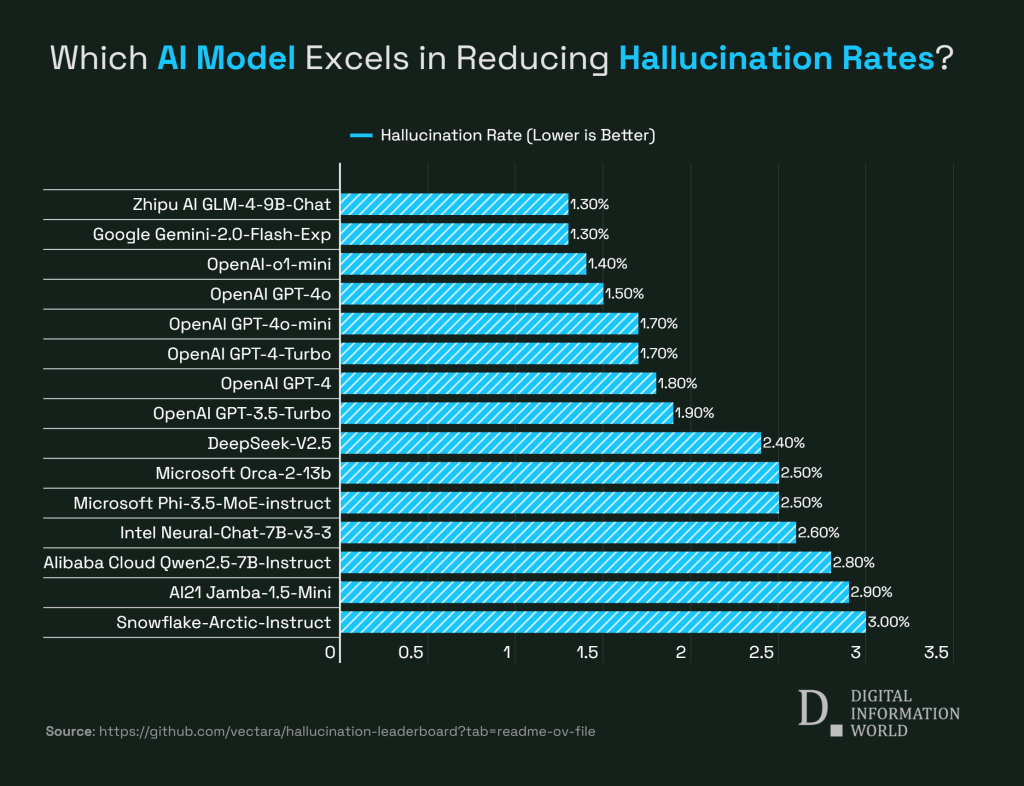

According to Vectara’s March 2025 evaluation of mainstream large language models, most maintain relatively low hallucination rates. Gemini-2.0-Flash-001 ranks first at 0.7%, meaning it introduces almost no false information when processing documents. Gemini-2.0-Pro-Exp and OpenAI’s o3-mini-high-reasoning follow closely at 0.8% each. These hallucination rates are already far lower than the error rates of elite human professionals.

Today’s top large language models have surpassed human experts in knowledge-intensive tasks and structured scenarios (such as code generation and compliance reviews), though they still lag in open-ended creation (such as literary composition) and in tasks that depend on real-world experience (such as complex ethical judgments).

Taking medicine as an example, the World Health Organization has reported that the average misdiagnosis rate in clinical medicine is 30%, with 80% of medical errors caused by thinking and cognitive mistakes. According to the “China Rare Disease Comprehensive Social Survey” 2020-2021 data, the average time to diagnose a rare disease in China is 4.26 years, with a misdiagnosis rate as high as 42%. Medicine is a microcosm of human hallucination.

The Root Causes of Human Hallucinations

From a macro perspective, human cognitive biases and misunderstandings are far more severe than those of large language models. They stem from how the human brain processes information, from built-in cognitive biases, and from the influence of the external environment, and they are an inevitable result of our biological limitations.

The causes of human hallucinations are much more numerous than the root causes of AI hallucinations.

First, humans tend to seek out, interpret, and remember information that supports their existing beliefs while ignoring or undervaluing contradictory information (confirmation bias). We also rely on easily recalled information to judge how likely or frequent an event is (availability heuristic), which can lead us to misjudge probabilities. And when making decisions, we depend too heavily on the first information we receive (anchoring effect), even when later information is more important. The Titanic was considered “unsinkable,” and its crew and management paid insufficient attention to iceberg warnings. The ship struck an iceberg and sank on its maiden voyage, with the loss of over 1,500 lives.

Second, when faced with large amounts of information, humans may struggle to effectively process and filter it, leading to information misunderstanding or misjudgment. In 1986, operators at the former Soviet Union’s Chernobyl nuclear power plant ignored multiple safety protocols and warning signals during a safety test, resulting in one of history’s worst nuclear power plant accidents, causing massive radiation leakage. Since the accident, Pripyat and Chernobyl have been described as “ghost towns,” with an area of about 2,000 square kilometers becoming nearly uninhabited.

Additionally, humans’ frequently fluctuating emotional states and personal motivations affect information processing and decision-making. For example, anxiety may lead to overestimation of risks, while optimism may lead to underestimation. In 2003, the United States and its allies invaded Iraq on the basis of faulty intelligence that Iraq possessed weapons of mass destruction. The invasion resulted in long-term regional instability and numerous casualties, and no weapons of mass destruction were ultimately found.

Historical Examples of Human Hallucinations

Even individuals with enormous power and influence may cause serious consequences due to cognitive biases, misjudgments, or ignoring warnings. European witch hunts, Nazi massacres, and U.S. President Trump’s recent tariff war are typical examples of crises triggered by human hallucinations.

In a speech, Trump stated: “For years, as other countries grew rich and strong, hardworking American citizens were forced to stand by, much of it at our expense… Now it’s our turn to prosper.” Such remarks drew criticism even from “The Economist,” the long-established magazine of Britain, a staunch American ally: “He [Trump] conveniently ignores two facts: globalization has brought unprecedented prosperity to America, and America has been the main architect of the rules supporting international trade. Now, if Trump gets his way, the economic order that has been slowly and steadily built since World War II will be buried. Instead, Trump praises America’s prosperity in the late 19th century, when America was much poorer than today.”

If even a U.S. president, with all his position and authority, cannot escape his own cognitive biases, how much worse must the hallucinations be among ordinary people, who face far greater information gaps?

As neuroscientist Damasio said: “Humans are not thinking machines that can feel, but feeling machines that can think.” The sentence may read like a tongue twister, but read it a few more times and you’ll appreciate its subtlety.

References:

1. Google’s detailed explanation of AI hallucinations: https://cloud.google.com/discover/what-are-ai-hallucinations?hl=zh-CN

2. Vectara’s latest hallucination evaluation: https://github.com/vectara/hallucination-leaderboard