Sensor Fusion: Enhancing Navigation and Safety for Autonomous Mobile Robots

The concept behind Industry 5.0 envisions humans working alongside AI-powered robots that support rather than replace human workers. Before this vision becomes reality, Autonomous Mobile Robots (AMRs) must overcome several challenges, with sensor fusion being a key enabling technology.

Key Challenges in AMR Adoption

The primary challenge for AMR adoption is the diversity of applications and environments in which they operate. AMRs are already being deployed in warehouses, agricultural technology, commercial landscaping, healthcare, smart retail, security and surveillance, delivery, inventory management, and picking and sorting operations. In all these diverse settings, AMRs must operate safely alongside humans.

AMRs also face situational complexities that humans take for granted. For instance, consider a delivery robot encountering a ball in its path. While it might easily identify and avoid the ball, would it anticipate a child running to retrieve it? Other complex scenarios include:

- Using mirrors to see around corners

- Recognizing unsafe surfaces like freshly poured concrete

- Identifying edges, cliffs, ramps, and stairs

- Responding to unexpected scenarios like vandalism

- Navigating through crowded areas with unpredictable human movement

- Adapting to changing weather conditions affecting sensor performance

- Managing reflective surfaces that can confuse optical sensors

These challenges become even more pronounced in dynamic environments such as construction sites, busy warehouses, or outdoor settings where lighting and weather conditions constantly change. Traditional programming approaches fail to address these complexities, necessitating advanced sensor systems and AI integration.

High-Performance Sensors for AMRs: Detailed Analysis

Various sensor types are essential for simultaneous localization and mapping (SLAM) and for providing distance and depth measurements. The industry has seen significant advancements in each sensor category, with improvements in miniaturization, power efficiency, and processing capabilities.

CMOS Imaging Sensors

Modern CMOS imaging sensors have evolved dramatically in recent years, offering:

- Resolution options from VGA to 4K and beyond: Higher resolutions enable finer detail recognition at the cost of increased data processing requirements

- Global shutter vs. rolling shutter technologies: Global shutter prevents motion distortion critical for fast-moving robots

- HDR (High Dynamic Range) capabilities: Latest sensors offer 140dB+ dynamic range, allowing for operation in challenging lighting conditions from bright sunlight to near darkness

- AI acceleration on-chip: New generations include neural network accelerators for edge processing

- Power efficiency improvements: Low-power modes that can wake on detected motion save significant battery life in mobile applications

Industry leaders have reduced pixel size to 0.7μm while improving quantum efficiency, allowing for smaller cameras with better performance. Developments in stacked CMOS technology enable faster readout speeds and reduced motion artifacts.
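The resolution trade-off noted in the list above can be made concrete with a rough bandwidth estimate. The sketch below computes the uncompressed data rate of a sensor stream. The function name, the 30 fps frame rate, and the 10-bit pixel depth are illustrative assumptions, not figures from any specific sensor.

```python
def raw_data_rate_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed sensor output in megabits per second (1 Mbit = 1e6 bits)."""
    return width * height * fps * bits_per_pixel / 1e6


# Assumed example settings: 30 frames per second, 10-bit raw pixels.
vga = raw_data_rate_mbps(640, 480, fps=30, bits_per_pixel=10)
uhd = raw_data_rate_mbps(3840, 2160, fps=30, bits_per_pixel=10)
print(f"VGA: {vga:.2f} Mbit/s, 4K: {uhd:.2f} Mbit/s, ratio: {uhd / vga:.0f}x")
```

Under these assumptions a 4K stream carries 27 times the raw data of a VGA stream, which is the processing cost that comes with the finer detail.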

Time-of-Flight (ToF) Technologies

Both direct time-of-flight (dToF) and indirect time-of-flight (iToF) technologies have seen significant advancements:

- dToF: Latest single-photon avalanche diode (SPAD) arrays can achieve millimeter precision at ranges up to 100 meters in optimal conditions

- iToF: Modern sensors achieve sub-centimeter accuracy at ranges up to 5 meters with significantly lower power consumption than dToF

- Miniaturization: Module sizes have decreased by 70% in the past five years while improving performance

- Multi-zone capabilities: Advanced ToF sensors can now process multiple depth zones simultaneously

- Ambient light rejection: Improved filtering techniques allow operation in outdoor environments

- Power consumption: New generations operate at sub-500mW levels for continuous sensing

The industry trend shows increasing integration of ToF sensors with RGB cameras for combined color and depth sensing in compact packages, with costs decreasing due to automotive industry adoption driving volume production.
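The two ToF principles differ in what they measure: dToF times a light pulse, while iToF measures the phase shift of a continuously modulated signal. The sketch below shows the standard distance relations for both. The function names and the 30 MHz modulation frequency are assumptions chosen for illustration, and real sensors add calibration and noise handling that is omitted here.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def dtof_distance(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels to the target and back, so halve the path."""
    return C * round_trip_s / 2.0


def itof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect ToF: distance from the phase shift of the modulated signal."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def itof_unambiguous_range(mod_freq_hz: float) -> float:
    """Distance at which the phase wraps past 2*pi and readings become ambiguous."""
    return C / (2.0 * mod_freq_hz)


# Assumed modulation frequency of 30 MHz, for illustration only.
print(f"{itof_unambiguous_range(30e6):.2f} m unambiguous range at 30 MHz")
print(f"{dtof_distance(66.7e-9):.2f} m for a 66.7 ns round trip")
```

With a single modulation frequency of about 30 MHz the phase wraps at roughly 5 meters, which illustrates one reason iToF is typically used for short ranges.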

Radar Technology

Radar technology for AMRs has benefitted from automotive industry developments:

- mmWave radar (60GHz-79GHz): Offers penetration through dust, smoke, and light precipitation

- Resolution improvements: Modern systems achieve angular resolution below 1° with advanced antenna arrays

- Size reduction: Typical modules have shrunk by 60% in five years

- Range capabilities: Current systems reliably detect large obstacles at 200+ meters and smaller obstacles at 50+ meters

- Doppler processing: Advanced velocity detection can identify and track multiple moving objects simultaneously

- Power efficiency: Operating power has decreased to 1-2W for continuous operation

- Cost trajectory: Prices have dropped 40% in three years due to automotive mass production

Recent developments include software-defined radar systems that can reconfigure detection parameters on-the-fly based on environmental conditions and task requirements.
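Two textbook relations explain much of what the list above describes: Doppler shift gives radial velocity, and sweep bandwidth sets range resolution. The following is a minimal sketch of both. The function names and the example numbers (a 77 GHz carrier and a 4 GHz sweep) are assumptions for illustration, not parameters of a particular module.

```python
C = 299_792_458.0  # speed of light in m/s


def radial_velocity(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial target velocity (m/s) from the measured Doppler shift."""
    return doppler_shift_hz * C / (2.0 * carrier_hz)


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest range separation (m) resolvable with a given sweep bandwidth."""
    return C / (2.0 * bandwidth_hz)


# Assumed example values: 77 GHz carrier, 1 kHz Doppler shift, 4 GHz sweep.
print(f"{radial_velocity(1_000.0, 77e9):.3f} m/s")
print(f"{range_resolution(4e9) * 100:.2f} cm")
```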

Ultrasonic Sensors

While a mature technology, ultrasonic sensors continue to evolve:

- Range improvements: Advanced models achieve up to 10 meters range (up from traditional 3-5 meters)

- Miniaturization: New MEMS-based ultrasonic transducers are 75% smaller than conventional designs

- Multi-frequency operation: Allows better material differentiation and improved object classification

- Improved interference rejection: Modern designs can operate multiple sensors in close proximity without cross-talk

- Weather resistance: Latest models maintain performance in rain, fog, and temperature extremes

- Energy efficiency: Power consumption has decreased to micro-watts in standby and milliwatts in active modes

Ultrasonic technology remains valuable for short-range detection in adverse conditions where optical sensors might fail, particularly for detecting transparent obstacles like glass.
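Ultrasonic ranging converts an echo delay into a distance, and because the speed of sound varies with air temperature, temperature extremes affect accuracy unless compensated. The sketch below uses the common linear approximation for the speed of sound in dry air. The function names are hypothetical, and humidity and pressure effects are ignored.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at a temperature in Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance to the obstacle; the pulse travels out and back, so halve the path."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0


# The same 10 ms echo read at two temperatures (assumed example values).
print(f"{echo_distance(0.010, temp_c=20.0):.3f} m at 20 C")
print(f"{echo_distance(0.010, temp_c=-10.0):.3f} m at -10 C")
```

The two readings differ by several centimeters for the same echo time, so a temperature input is worth including when the robot moves between indoor and outdoor areas.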

Inductive Positioning Systems

Inductive sensing offers unique capabilities for AMRs:

- High precision: Modern systems achieve sub-millimeter positional accuracy

- Immunity to environmental factors: Performance unaffected by dust, moisture, or lighting conditions

- Longevity: No mechanical wear ensures consistent performance over millions of cycles

- Low power requirements: Typical consumption under 100mW for continuous operation

- Cost-effectiveness: Simpler designs compared to optical systems reduce manufacturing costs

Recent innovations include grid-based positioning systems that can guide AMRs along predefined paths with extreme precision, ideal for manufacturing environments.

Bluetooth® Low Energy Positioning

BLE technology has evolved beyond simple connectivity:

- Direction Finding capabilities: Angle of Arrival (AoA) and Angle of Departure (AoD) technologies achieve location accuracy of 10cm in controlled environments

- Ultra-Wideband integration: Hybrid BLE/UWB systems improve precision to 5-10cm

- Mesh networking: Modern implementations support hundreds of nodes for facility-wide positioning

- Battery life: Latest modules operate for years on coin cell batteries

- Miniaturization: Complete modules now measure less than 1cm² including antennas

- Infrastructure leverage: Utilizing existing BLE beacons reduces deployment costs

Industry developments include AI-enhanced signal processing that improves positioning accuracy in multipath environments common in industrial settings.
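Angle of Arrival estimation rests on a simple geometric idea: the same wavefront reaches two antennas with a phase difference that depends on its incoming angle. The sketch below shows that relation for an idealized two-antenna array. The function name, the 2.44 GHz carrier, and the half-wavelength spacing are assumptions for illustration; production systems use more antennas and must deal with multipath.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def angle_of_arrival(phase_diff_rad: float, spacing_m: float, freq_hz: float = 2.44e9) -> float:
    """Angle (rad) from array broadside, given the phase difference between two antennas."""
    wavelength = C / freq_hz
    sine = phase_diff_rad * wavelength / (2.0 * math.pi * spacing_m)
    sine = max(-1.0, min(1.0, sine))  # clamp against measurement noise
    return math.asin(sine)


# Assumed setup: antennas half a wavelength apart, 90 degree phase difference.
half_wavelength = C / 2.44e9 / 2.0
angle = angle_of_arrival(math.pi / 2.0, half_wavelength)
print(f"{math.degrees(angle):.1f} degrees from broadside")
```

Keeping the spacing at or below half a wavelength avoids ambiguous angles, since larger spacings let different directions produce the same phase difference.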

Inertial Measurement Units (IMUs)

Modern IMUs have transformed from simple motion detectors to sophisticated navigation aids:

- MEMS technology advancement: Latest 9-axis units (accelerometer, gyroscope, magnetometer) achieve bias stability below 5°/hour

- Sensor fusion on-chip: Integrated processing reduces host system computational load

- Temperature compensation: Advanced algorithms maintain accuracy across industrial temperature ranges

- Size reduction: Complete IMUs now measure less than 3×3×1mm

- Power optimization: Operation at sub-1mA during continuous sensing

- Cost reduction: Prices have fallen by 60% in five years while performance has improved

New developments include machine learning-based calibration that continuously adapts to sensor drift and environmental conditions.
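A complementary filter is one of the simplest forms of on-chip IMU fusion: it trusts the gyroscope over short intervals and the accelerometer over long ones, which bounds gyro drift. The sketch below is a minimal single-axis version and is not the algorithm of any particular device. The function names, the axis and sign conventions, the blend factor of 0.98, and the bias value are all assumptions.

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Pitch (rad) implied by the gravity vector; axis conventions are assumed."""
    return math.atan2(ax, az)


def complementary_step(pitch: float, gyro_rate: float, ax: float, az: float,
                       dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (short term) with accelerometer tilt (long term)."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, az)


# Stationary, level robot whose gyro has an assumed bias of 0.01 rad/s, sampled at 100 Hz.
bias, dt = 0.01, 0.01
integrated = fused = 0.0
for _ in range(10_000):  # 100 seconds
    integrated += bias * dt  # gyro-only integration drifts without bound
    fused = complementary_step(fused, bias, ax=0.0, az=9.81, dt=dt)

print(f"gyro only: {integrated:.3f} rad, fused: {fused:.4f} rad")
```

In this setup the gyro-only estimate drifts by about one radian, while the fused estimate settles at a small constant offset because the accelerometer keeps pulling it back toward level.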

Sensor Performance Comparison for AMR Applications

| Sensor Type | Range | Resolution | Object Classification | Weather Resistance | Power Consumption | Size | Relative Cost | Strengths | Limitations |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CMOS Imaging | 0-100m | Very High | Excellent | Poor-Moderate | Moderate | Small | Low-Moderate | Superior object recognition, color detection | Struggles in low light, affected by direct sunlight |
| dToF LiDAR | 0-200m | High | Moderate | Moderate | High | Medium-Large | Moderate-High | Precise distance mapping, works in varied lighting | Performance degrades in adverse weather, reflective surfaces cause issues |
| iToF | 0-5m | High | Poor | Poor | Low | Small | Low | Compact, low power, good for close obstacles | Limited range, affected by ambient light |
| Radar | 0-300m | Low-Moderate | Poor-Moderate | Excellent | Moderate | Medium | High | Works in all weather, detects through some materials | Poor fine detail recognition, limited resolution |
| Ultrasonic | 0-10m | Low | Poor | Good | Very Low | Small | Very Low | Works in darkness, fog, detects transparent objects | Slow response, limited range and resolution |
| Inductive | 0-2cm | Very High | None | Excellent | Very Low | Small | Low | Precise positioning, unaffected by environment | Extremely limited range, requires infrastructure |
| BLE | 0-50m | Low | None | Good | Very Low | Very Small | Very Low | Low power, leverages infrastructure | Requires beacons, limited precision |
| IMU | N/A | Moderate | None | Excellent | Low | Very Small | Low | Works anywhere, no external references needed | Drift over time, requires calibration |

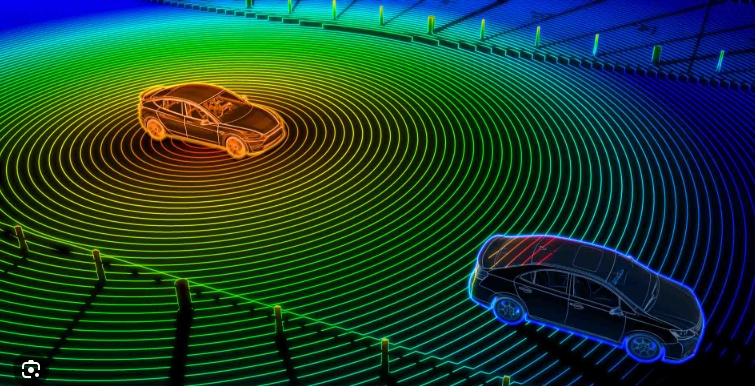

This comparison demonstrates why sensor fusion is essential—no single sensor provides optimal performance across all conditions and requirements.

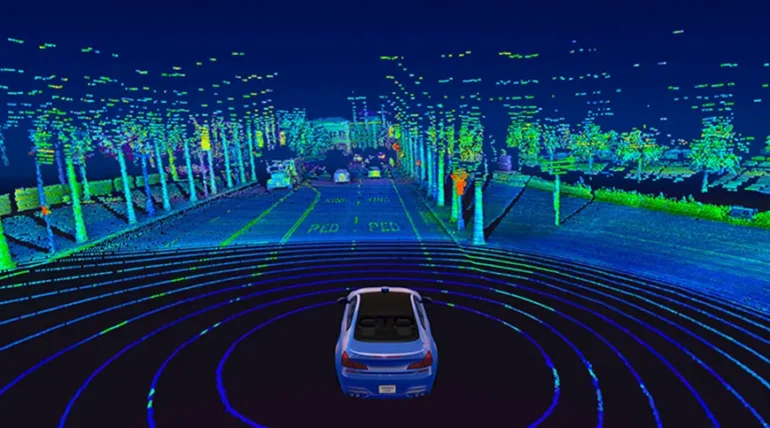

Advanced Sensor Fusion Architectures for AMRs

Sensor fusion has evolved from simple data combining to sophisticated architectures that maximize the strengths of each sensor type while compensating for individual weaknesses.

Fusion Levels and Methodologies

Modern sensor fusion systems operate at multiple levels:

- Low-level (Raw Data) Fusion: Combines unprocessed data directly from sensors

  - Requires high bandwidth but preserves all information

  - Typically implemented on dedicated hardware (FPGAs or ASICs)

  - Example: Combining raw point clouds from multiple LiDARs for improved resolution

- Feature-level Fusion: Extracts features from each sensor before combining

  - Reduces processing requirements while maintaining critical information

  - Often implemented on specialized vision processing units (VPUs)

  - Example: Combining edge features from cameras with distance data from radar

- Decision-level Fusion: Each sensor system makes independent decisions that are then combined

  - Most efficient in terms of communication bandwidth

  - Suitable for distributed processing architectures

  - Example: Separate object detection systems voting on final classification
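The voting example for decision-level fusion can be sketched as confidence-weighted voting, where each detector reports a label with a confidence and the label with the highest total wins. This is one simple scheme among several, not a prescribed method. The function name and the example labels and confidences are hypothetical.

```python
from collections import defaultdict


def fuse_decisions(votes: list[tuple[str, float]]) -> tuple[str, float]:
    """Return the winning label and its share of the total confidence."""
    scores: dict[str, float] = defaultdict(float)
    for label, confidence in votes:
        scores[label] += confidence
    total = sum(scores.values())
    if total <= 0.0:
        raise ValueError("votes must contain positive confidence")
    best = max(scores, key=scores.get)
    return best, scores[best] / total


# Hypothetical outputs from three independent detectors.
label, share = fuse_decisions([
    ("person", 0.9),   # camera classifier
    ("pallet", 0.6),   # LiDAR shape classifier
    ("person", 0.7),   # radar micro-motion classifier
])
print(label, round(share, 3))
```

Only the label and a confidence cross the link between each detector and the fusion node, which is why this level needs the least communication bandwidth.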

State-of-the-Art Fusion Algorithms

The industry has developed sophisticated approaches to sensor fusion:

- Kalman Filter Variants: Extended Kalman Filters (EKF) and Unscented Kalman Filters (UKF) remain foundational but are being enhanced with adaptive parameters

- Particle Filters: For non-Gaussian noise environments and highly non-linear systems

- Deep Learning Approaches: Multi-modal neural networks that process different sensor inputs simultaneously

  - Cross-attention mechanisms that learn optimal fusion weights dynamically

  - Graph neural networks for spatial-temporal fusion of sensor data

- Bayesian Methods: Probabilistic fusion frameworks that explicitly model uncertainty

- Federated Fusion: Distributed approaches that combine results from edge processors
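To show the predict and update cycle that the Kalman filter family shares, here is a minimal scalar (one-dimensional, linear) Kalman filter. It is a teaching sketch rather than an EKF or UKF, and it tracks only a robot's position along a corridor. The function names, noise variances, and measurements are assumed values for illustration.

```python
def predict(x: float, p: float, u: float, q: float) -> tuple[float, float]:
    """Motion step: shift the estimate by the odometry increment u; uncertainty grows by q."""
    return x + u, p + q


def update(x: float, p: float, z: float, r: float) -> tuple[float, float]:
    """Measurement step: blend in reading z, whose noise variance is r."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


x, p = 0.0, 1.0                        # initial position estimate and variance
x, p = predict(x, p, u=0.5, q=0.01)    # odometry reports 0.5 m of travel
x, p = update(x, p, z=0.62, r=0.04)    # assumed LiDAR range fix (low noise)
x, p = update(x, p, z=0.55, r=0.25)    # assumed ultrasonic fix (higher noise)
print(f"position {x:.3f} m, variance {p:.4f}")
```

Each update reduces the variance, and the noisier ultrasonic reading moves the estimate less than the LiDAR reading does. EKF and UKF variants keep this structure but handle non-linear motion and measurement models.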

How Sensor Fusion Enables Autonomous Mobile Robots in Practice

Sensor fusion combines data from multiple sources to generate a more comprehensive understanding of the system and its surroundings. This process is essential for AMRs as it provides enhanced reliability, redundancy, and safety. Here are some practical examples of how sensor fusion is implemented in modern AMRs:

Case Study: Warehouse Navigation

In warehouse environments, AMRs typically employ:

- Cameras for barcode reading and obstacle classification

- LiDAR for primary navigation and obstacle detection

- Ultrasonic sensors for close-range detection of transparent or reflective objects

- Inertial measurement for tracking during high-speed movement

The fusion process allows the robot to:

- Create detailed 3D maps with semantic understanding (identifying shelves, products, people)

- Navigate safely even when one sensor is temporarily blinded (e.g., bright light affecting cameras)

- Adapt to changing warehouse layouts without requiring complete reprogramming

- Achieve positioning accuracy within 1-2cm even in GPS-denied environments

Case Study: Outdoor Agricultural Robots

For agricultural applications, robots typically combine:

- Global Navigation Satellite System (GNSS) for broad positioning

- Cameras with specialized filters for crop identification and health monitoring

- LiDAR for terrain mapping and obstacle detection

- Radar for operation in dusty or foggy conditions

- Soil sensors for targeted treatment

This fusion enables:

- Centimeter-level precision in planting and harvesting

- All-weather operation capability

- Identification of plant diseases or pest infestations

- Safe operation around livestock and farm workers

- Reduced chemical use through targeted application

Benefits of Advanced Sensor Fusion

Today’s sophisticated fusion systems deliver several key advantages:

- Enhanced Perception Reliability: By combining complementary sensors, the system maintains performance even when individual sensors face challenging conditions

- Reduced Latency: Parallel processing of sensor data with predictive algorithms minimizes response time

- Extended Operational Range: Combining sensors that each perform best at a different distance creates seamless perception from immediate proximity to long range

- Improved Classification Accuracy: Multi-modal data significantly improves object recognition compared to single-sensor approaches

- Energy Efficiency: Context-aware sensor management activates only the necessary sensors for current conditions

- Sensor Self-Monitoring: Cross-validation between sensors identifies when a particular sensor is malfunctioning
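Sensor self-monitoring through cross-validation can be sketched as a consistency check: each sensor's range to the same obstacle is compared against the median of all readings, and large deviations are flagged. This is a deliberately simple scheme that assumes at least three sensors observe the same target. The function name, sensor names, readings, and tolerance are hypothetical.

```python
from statistics import median


def flag_outliers(readings: dict[str, float], tolerance_m: float) -> list[str]:
    """Names of sensors whose reading deviates from the median by more than the tolerance."""
    reference = median(readings.values())
    return sorted(name for name, value in readings.items()
                  if abs(value - reference) > tolerance_m)


# Hypothetical ranges (m) to one obstacle as reported by four sensors.
readings = {"lidar": 2.02, "radar": 1.95, "ultrasonic": 2.00, "camera_depth": 3.40}
suspects = flag_outliers(readings, tolerance_m=0.30)
print(suspects)
```

The median is used as the reference because a single faulty sensor cannot pull it far, unlike the mean.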

Emerging Trends in Commercial Sensor Fusion Systems

Several significant trends are reshaping sensor fusion technology:

- AI-Optimized Sensor Arrays: Neural architecture search techniques automatically determine optimal sensor configurations for specific applications

- Adaptive Fusion Weights: Real-time adjustment of how much each sensor contributes based on current reliability and environmental conditions

- Edge-Cloud Hybrid Processing: Time-critical fusion occurs on-device while complex analysis leverages cloud resources

- Cooperative Perception Networks: Multiple AMRs share sensory data to extend perception beyond individual robot capabilities

- Human-Robot Collaborative Sensing: Integrating wearable sensors on human workers to improve robot awareness in shared workspaces

- Semantic Fusion: Moving beyond geometric fusion to include contextual understanding and prediction capabilities

- Digital Twin Integration: Sensor fusion systems that update real-time digital models for simulation and predictive analysis
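Adaptive fusion weights can be illustrated with inverse-variance weighting, in which a sensor's influence falls as its estimated noise rises. The sketch below assumes the per-sensor variances are already known. Estimating them from current conditions is the hard part in practice and is not shown. The function name and all numbers are illustrative assumptions.

```python
def inverse_variance_fusion(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse (value, variance) pairs; returns the fused value and its variance."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Range to an obstacle from radar (2.0 m) and a camera depth estimate (2.4 m).
clear, clear_var = inverse_variance_fusion([(2.0, 0.01), (2.4, 0.09)])
# In fog the camera's assumed variance rises, so its weight drops automatically.
foggy, foggy_var = inverse_variance_fusion([(2.0, 0.01), (2.4, 0.81)])
print(f"clear: {clear:.3f} m, fog: {foggy:.3f} m")
```

As the camera becomes less reliable the fused estimate moves toward the radar reading without any explicit mode switch.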

Industry Applications and Implementation Challenges

Current Commercial Deployments

Sensor fusion is already enabling commercial AMR applications across multiple industries:

- Manufacturing: AMRs with fusion-based navigation systems achieve 99.9% uptime in 24/7 operations, delivering components to production lines with precision timing

- Retail: Inventory robots combining RFID and vision systems scan shelves at rates of 30,000+ items per hour with 99% accuracy

- Healthcare: Hospital delivery robots navigate complex environments and integrate with elevator and door systems using multi-sensor fusion

- Construction: Autonomous equipment performs site surveys combining aerial imagery with ground-based LiDAR for complete digital twins

- Last-mile Delivery: Sidewalk robots navigate urban environments using camera-LiDAR fusion augmented with geo-referenced data

Implementation Challenges

Despite significant progress, several challenges remain in implementing effective sensor fusion:

- Calibration Complexity: Maintaining precise spatial and temporal alignment between multiple sensors requires sophisticated calibration procedures

- Processing Requirements: Advanced fusion algorithms demand significant computational resources, creating power and thermal management challenges

- Failure Mode Analysis: Understanding how fusion systems degrade when individual sensors fail remains difficult

- Verification and Validation: Testing fusion systems across all possible environmental conditions is practically impossible

- Standards Development: The industry lacks standardized evaluation metrics for fusion performance

- Cost Optimization: Balancing system capabilities with affordable designs for volume production
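Temporal alignment, the first challenge listed above, arises because sensors sample at different rates and times. A common first step is to resample each stream onto a shared timestamp before fusing. The sketch below does this with linear interpolation. The function name and the sample data are hypothetical, and it assumes timestamps are sorted and expressed on a common clock.

```python
from bisect import bisect_left


def interpolate_at(timestamps: list[float], values: list[float], t: float) -> float:
    """Linearly interpolate a sensor stream at time t (timestamps must be sorted)."""
    if not timestamps or not timestamps[0] <= t <= timestamps[-1]:
        raise ValueError("t is outside the recorded interval")
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    weight = (t - t0) / (t1 - t0)
    return values[i - 1] + weight * (values[i] - values[i - 1])


# Hypothetical 10 Hz range stream, resampled at a camera frame captured at t = 0.15 s.
stamps = [0.0, 0.1, 0.2]
ranges = [0.0, 1.0, 3.0]
aligned = interpolate_at(stamps, ranges, 0.15)
print(f"{aligned:.2f}")
```

Refusing to extrapolate outside the recorded interval is a deliberate choice here, since a stale or missing sample is better surfaced as an error than silently guessed.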

Comprehensive Sensor Ecosystem for AMRs

Imaging Solutions

- Global Shutter Sensors: The AR0234CS 2.3MP CMOS global shutter sensor offers industry-leading 120dB dynamic range with specialized automotive qualification

- Rolling Shutter Sensors: The AR0821CS 8.3MP sensor balances resolution and sensitivity for long-range detection

- Specialized Features: On-chip HDR processing, LED flicker mitigation, and advanced defective pixel correction

- Vision Processing: RSL10 Vision Development Kit integrates ultralow-power image capture with AI processing at the edge

LiDAR and ToF Solutions

- Silicon Photomultipliers (SiPMs): The RB Series provides industry-leading photon detection efficiency with thermal stability

- SPAD Arrays: Advanced time-of-flight sensors achieve millimeter precision with integrated signal processing

- Driver and Receiver Circuits: Complete analog front-end solutions optimized for ToF applications

- Synchronization Solutions: Precision timing circuits ensuring accurate multi-sensor data alignment

Ultrasonic and Inductive Sensing

- Ultrasonic Transducer Drivers: Specialized amplifiers delivering efficient driving of ultrasonic elements

- Signal Conditioning: Advanced filtering and amplification for reliable detection in noisy environments

- Inductive Position Sensors: High-precision position detection for robotics joints and proximity detection

Connectivity and Positioning

- Bluetooth® LE SoCs: The RSL10 family offers ultra-low-power wireless capabilities with direction-finding support

- Sensor Hubs: Dedicated processors for sensor fusion with hardware acceleration for common algorithms

- Power Management: Specialized PMICs designed for the unique requirements of sensor arrays with multiple supply domains

Development and Integration Support

- Modular Hardware Platforms: Evaluation kits and reference designs across sensor technologies

- Fusion Software Libraries: Optimized algorithms for common sensor combinations

- Simulation Tools: Digital twins of sensors for virtual testing before hardware implementation

- System Solution Guides: Application-specific integration approaches for different AMR use cases

Future Directions in AMR Sensor Fusion

Looking ahead, several emerging technologies are poised to transform AMR sensor capabilities:

- Event-based Sensors: Neuromorphic vision sensors that detect changes rather than capturing frames, dramatically reducing data and power requirements

- Quantum Sensors: Next-generation sensors leveraging quantum effects for unprecedented sensitivity

- Soft Robotics Integration: Tactile and pressure sensor arrays that enable robots to interact more safely with humans

- Biologically Inspired Sensing: Sensor designs mimicking animal sensory systems, such as whisker-inspired tactile arrays

- Self-healing Sensors: Systems that can automatically recalibrate or compensate for sensor degradation over time

- Transparent Sensors: Optical sensors integrated directly into surfaces without being visible

- Energy-harvesting Sensor Nodes: Self-powered sensors that eliminate battery maintenance

Summary

Autonomous mobile robots are finding increasingly diverse applications, with adoption accelerating across industries. The complexity of environments and use cases demands sophisticated sensing solutions that no single sensor technology can provide. Sensor fusion has emerged as the critical enabling technology for AMRs, combining diverse sensor modalities to create systems that are more capable, reliable, and safe than any individual component.

Best practices for successful implementation include:

- Controlling the environment to reduce potential collision risks, such as establishing designated routes for AMRs in manufacturing or warehouse facilities

- Simulating exact use cases (including edge cases) using digital twins during development

- Incorporating multi-level sensor fusion with intelligent sensors, algorithms, and models optimized for specific application requirements

- Implementing adaptive systems that can reconfigure sensing strategies based on environmental conditions

- Designing for degraded operation, ensuring safe function even when some sensors are compromised

As Industry 5.0 continues to evolve, the collaboration between humans and robots will become increasingly seamless, enabled by ever more sophisticated sensor fusion systems that allow AMRs to perceive, understand, and navigate complex environments with human-like awareness.