Introduction to AMD ROCm: The Open-Source Software Stack for GPU Computing

Introduction to AMD ROCm

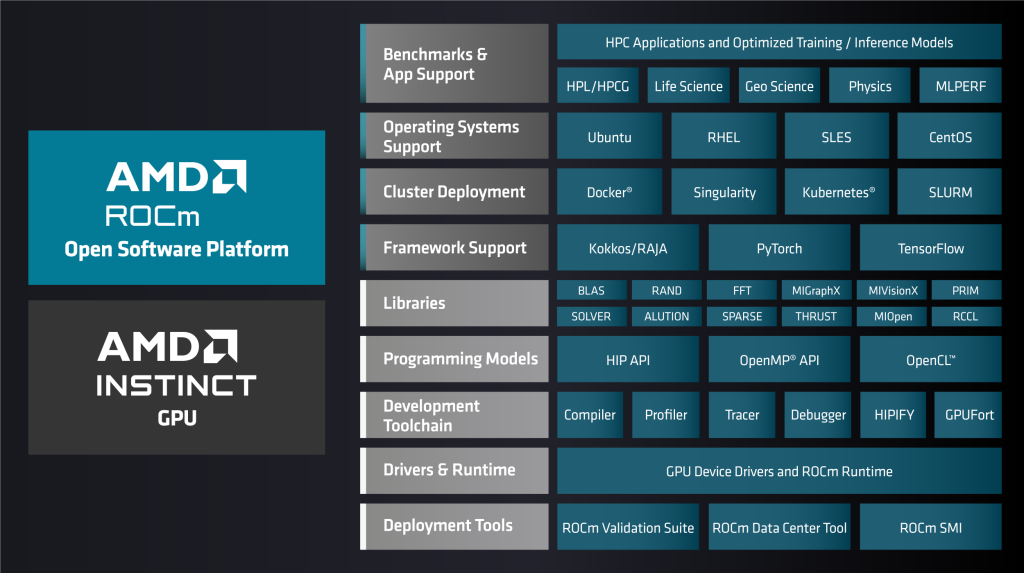

AMD ROCm is an open-source software stack designed for developing artificial intelligence (AI) and high-performance computing (HPC) solutions on AMD GPUs. This comprehensive platform consists of programming models, tools, compilers, libraries, and runtime components that enable developers to harness the full potential of AMD’s GPU technology.

As organizations increasingly rely on GPU acceleration for complex computational tasks, AMD ROCm provides a robust foundation for building and deploying cutting-edge applications across various domains. Whether you’re developing machine learning models, simulating physical phenomena, or processing massive datasets, ROCm software offers the tools and performance needed to tackle these challenges efficiently.

Unleash Your Workloads with the ROCm 6 Platform

The ROCm 6 platform represents a significant advancement in AMD’s open-source software stack, delivering optimized performance for both HPC and AI workloads. This latest iteration provides developers with a comprehensive set of capabilities that enhance productivity and computational efficiency.

ROCm supports low-level kernel programming, so developers can tune performance-critical code directly. A key advantage of AMD ROCm is its portability across GPU devices: the same code can run on different hardware configurations without extensive modification.

The platform’s commitment to open-source technologies facilitates collaboration and deployment, making it an attractive option for organizations seeking to avoid vendor lock-in. By leveraging free and open-source software, developers can build sustainable solutions that evolve with changing requirements and technological advancements.

Accelerate Your AI Applications

AMD ROCm provides robust support for popular AI and machine learning frameworks, making it easier for developers to build and deploy sophisticated models. The platform seamlessly integrates with TensorFlow, JAX, and PyTorch, allowing data scientists and AI researchers to leverage familiar tools while benefiting from AMD’s GPU acceleration.
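As a rough illustration of what this integration looks like from the framework side, the sketch below checks which GPU backend a PyTorch installation reports. It relies on two behaviors of ROCm builds of PyTorch: they expose AMD GPUs through the usual `torch.cuda` interface, and they set `torch.version.hip`. The function name `describe_gpu_backend` and its return strings are hypothetical, chosen for this example only.

```python
def describe_gpu_backend():
    """Return a short label for the GPU backend PyTorch reports, if any.

    The labels are illustrative, not part of any PyTorch or ROCm API.
    """
    try:
        import torch  # optional here; the sketch degrades gracefully without it
    except ImportError:
        return "pytorch-not-installed"

    # ROCm builds of PyTorch reuse the torch.cuda namespace for AMD GPUs.
    if not torch.cuda.is_available():
        return "cpu-only"

    # torch.version.hip is a version string on ROCm builds and None on CUDA builds.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


print(describe_gpu_backend())
```

Because the device is still addressed as `"cuda"` on a ROCm build, typical PyTorch scripts need few or no source changes to run on AMD GPUs.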

In addition to these mainstream frameworks, ROCm software supports specialized libraries and runtimes such as DeepSpeed, ONNX Runtime, and CuPy. These components enhance model development and runtime performance, enabling more efficient training and inference for complex AI systems.

AMD reports up to an 8× performance improvement for the latest version relative to the previous generation. A speedup of that size lets organizations train larger models and process more data in the same amount of time. AI practitioners working with resource-intensive applications such as large language models or computer vision systems stand to benefit the most.

Enhance Your High-Performance Computing Workloads

High-performance computing applications span numerous scientific and engineering domains, from astrophysics and climate modeling to computational chemistry and fluid dynamics. AMD ROCm provides essential support for these computationally intensive workloads, enabling researchers and engineers to solve complex problems more efficiently.

The platform’s compatibility with a wide range of HPC applications ensures that scientists can focus on their research rather than software integration challenges. Additionally, AMD Infinity Hub offers access to numerous GPU-accelerated applications via containers, simplifying deployment and management of specialized software stacks.

By leveraging AMD ROCm for high-performance computing, organizations can accelerate simulations, analyze larger datasets, and generate more accurate models. This capability is particularly valuable in fields where computational resources have traditionally limited the scope and scale of research projects.

New Features in ROCm 6

ROCm 6 introduces several exciting enhancements that expand its capabilities and improve performance across various use cases:

Extended Support

ROCm 6 extends support to the AMD Instinct MI300A and MI300X accelerators, enabling developers to harness the power of AMD’s latest GPU technology. These accelerators target demanding AI and HPC workloads that require massive computational resources.

Key AI Features

The platform includes several AI-focused enhancements, such as the ROCm converter engine, which facilitates model conversion and optimization. Additionally, optimized attention algorithms improve performance for transformer-based models, while collective communication libraries enhance distributed training capabilities.
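For readers unfamiliar with what an attention algorithm computes, here is a minimal reference sketch of scaled dot-product attention in plain Python. It is the textbook formulation, not ROCm’s optimized implementation; optimized kernels aim to produce the same result while using far less memory traffic. The function names `softmax` and `attention` are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    q, k, v are lists of equal-width rows (lists of floats);
    k and v must have the same number of rows.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        # Similarity of this query against every key, scaled by 1/sqrt(d).
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        weights = softmax(scores)
        # Weighted average of the value rows.
        out.append([
            sum(w * vj[c] for w, vj in zip(weights, v))
            for c in range(len(v[0]))
        ])
    return out


# Two identical keys receive equal weight, so the output is the mean of the values.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[0.0, 2.0], [4.0, 6.0]]))
```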

Performance Optimization

ROCm 6 improves support for reduced-precision formats, including FP16, BF16, and FP8, allowing developers to balance accuracy and performance based on application requirements. The release also includes HIP graph optimizations. HIP graphs let an application capture a sequence of GPU operations once and replay it, which reduces launch overhead for repetitive workloads.
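The accuracy side of that trade-off can be seen without a GPU. The sketch below emulates FP16 and BF16 rounding in plain Python using only the standard library; FP8 is left out because the `struct` module has no 8-bit float format. The helper names `to_fp16` and `to_bf16` are hypothetical, and the BF16 helper assumes finite inputs within float32 range.

```python
import math
import struct


def to_fp16(x):
    """Round x to IEEE 754 half precision; values beyond FP16 range become +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses to pack out-of-range values; hardware would produce inf.
        return math.copysign(math.inf, x)


def to_bf16(x):
    """Round x to bfloat16 (the top 16 bits of float32), round-to-nearest-even.

    Assumes x is finite and representable as float32.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # rounding bias, ties to even
    bits &= 0xFFFF0000                   # keep sign, exponent, 7 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for value in (0.1, 1000.5, 70000.0):
    print(value, to_fp16(value), to_bf16(value))
```

FP16 keeps more mantissa bits, so it is more precise for small values, while BF16 keeps the float32 exponent range, so it still represents large values that overflow FP16.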

Developer Support

To facilitate the development of efficient AI models, ROCm 6 incorporates structured sparsity and quantization libraries. These tools enable developers to reduce model size and improve inference speed without significant accuracy loss, making it easier to deploy sophisticated AI systems in resource-constrained environments.
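To make these two ideas concrete, the following sketch applies them to a short list of weights in plain Python. It assumes a 2:4 sparsity pattern (keep the two largest-magnitude values in every group of four) and symmetric per-tensor int8 quantization. Both are common choices, but the source does not say which schemes the ROCm libraries use, and the function names here are hypothetical rather than part of any ROCm API.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in every group of four."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(v if j in keep else 0.0 for j, v in enumerate(group))
    return pruned


def quantize_int8(values):
    """Symmetric per-tensor quantization; returns (int8 values, scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def dequantize(ints, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in ints]


weights = [0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5]
sparse = prune_2_of_4(weights)
ints, scale = quantize_int8(sparse)
print(sparse)
print(ints, scale)
print(dequantize(ints, scale))
```

Half of the weights become zero and the rest fit in one byte each, which is where the reduction in model size comes from. The rounding error per weight is bounded by half the scale.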

Expanded Ecosystem Support

The latest version integrates with the newest frameworks, models, and machine learning pipelines, ensuring compatibility with the rapidly evolving AI landscape. This expanded ecosystem support allows developers to adopt cutting-edge techniques and methodologies while benefiting from AMD’s GPU acceleration.

Industry Validation

Greg Diamos, Chief Technology Officer at Lamini, states that ROCm has achieved software parity with CUDA for Large Language Models (LLMs). This endorsement underscores the platform’s maturity and its capability to support demanding AI workloads, positioning it as a viable alternative to established GPU computing ecosystems.

The achievement of software parity represents a significant milestone for AMD ROCm, as it demonstrates the platform’s ability to compete with industry-standard solutions in terms of functionality, performance, and developer experience. For organizations looking to diversify their GPU computing infrastructure or optimize costs, this parity offers compelling reasons to consider AMD’s technology stack.

Conclusion

AMD ROCm provides a comprehensive, open-source software stack that empowers developers to create high-performance applications for AI, scientific computing, and other computationally intensive domains. With its support for popular frameworks, advanced optimization capabilities, and commitment to open standards, ROCm software offers a compelling platform for organizations seeking to leverage GPU acceleration.

As the AI and HPC landscapes continue to evolve, AMD ROCm remains at the forefront of innovation, delivering the tools and performance needed to tackle tomorrow’s computational challenges. Whether you’re developing cutting-edge AI models or simulating complex physical phenomena, ROCm provides the foundation for success in an increasingly GPU-accelerated world.
