Unlocking New Horizons in AI and HPC with AMD ROCm™ 6.3

Introduction to AMD ROCm 6.3: Advancing AI and High-Performance Computing

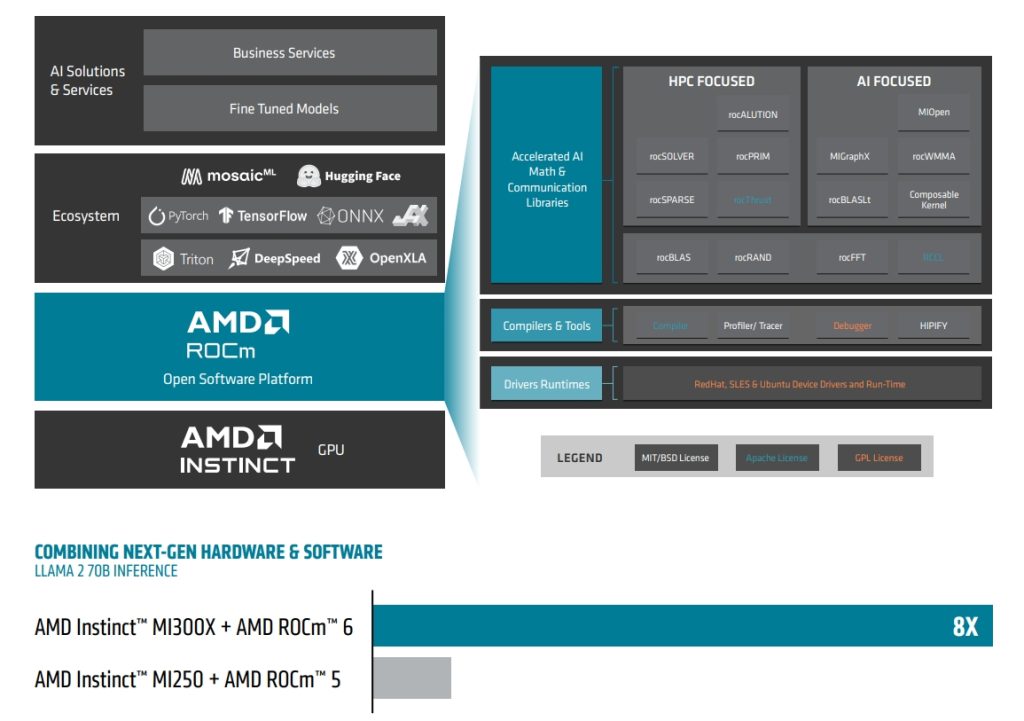

AMD ROCm 6.3 represents a significant milestone for AMD’s open-source platform, introducing advanced tools and optimizations to enhance AI, ML, and HPC workloads on AMD Instinct GPU accelerators. This release aims to improve developer productivity and support a wide range of customers, from innovative AI startups to HPC-driven industries.

This blog explores the outstanding features of this release, including seamless integration of SGLang for accelerated AI inference, redesigned FlashAttention-2 for optimized AI training and inference, introduction of multi-node Fast Fourier Transform (FFT) to transform HPC workflows, and much more.

Key Features of ROCm 6.3

SGLang: Ultra-Fast Inference for Generative AI Models

Generative AI is transforming industries, but deploying large models often presents challenges in latency, throughput, and resource utilization. SGLang is a new runtime supported in ROCm 6.3, specifically designed to optimize inference of cutting-edge generative models like LLMs and VLMs on AMD Instinct GPUs.

Why it matters:

- 6x Higher Throughput: Performance increased by 6x for LLM inference compared to existing systems, enabling businesses to serve AI applications at scale

- Ease of Use: Python-integrated and pre-configured in ROCm Docker containers, allowing developers to accelerate deployment of interactive AI assistants, multi-modal workflows, and scalable cloud backends while reducing setup time

Whether building customer-facing AI solutions or scaling AI workloads in the cloud, SGLang delivers the performance and usability needed to meet enterprise requirements.

Redesigned FlashAttention-2: Next-Level Transformer Optimization

Transformer models are at the core of modern AI, but their high memory and computational requirements traditionally limited scalability. With FlashAttention-2 optimized for ROCm 6.3, AMD addresses these pain points, enabling faster and more efficient training and inference.

Key benefits for developers:

- 3x Acceleration: Up to 3x speedup on backward passes and efficient forward passes compared to FlashAttention-1, accelerating model training and inference

- Extended Sequence Lengths: Efficient memory utilization and reduced I/O overhead make processing longer sequences seamless on AMD Instinct GPUs

AMD Fortran Compiler: Connecting Legacy Code with GPU Acceleration

With the introduction of the new AMD Fortran compiler in ROCm 6.3, businesses running legacy Fortran-based HPC applications can now unlock the power of modern GPU acceleration through AMD Instinct accelerators.

Primary advantages:

- Direct GPU Offloading: Leverage AMD Instinct GPUs and OpenMP offloading to accelerate critical scientific applications

- Backward Compatibility: Build on existing Fortran code while taking advantage of AMD’s next-generation GPU capabilities

- Simplified Integration: Interface seamlessly with HIP kernels and ROCm libraries, eliminating the need for complex code rewrites

Industries such as aerospace, pharmaceuticals, and weather modeling can now future-proof their legacy HPC applications, achieving GPU acceleration without extensive code refactoring.

Multi-Node FFT in rocFFT: Game Changer for HPC Workflows

Industries relying on HPC workloads—from oil and gas to climate modeling—require efficiently scalable distributed computing solutions. ROCm 6.3 introduces multi-node FFT support in rocFFT, enabling high-performance distributed FFT computations.

Why it’s crucial for HPC:

- Built-in Message Passing Interface (MPI) Integration: Simplifies multi-node scaling, helping reduce complexity for developers and accelerating enablement of distributed applications

- Leading Scalability: Scales seamlessly across massive datasets, optimizing performance for critical workloads like seismic imaging and climate modeling

Organizations in industries such as oil and gas and scientific research can now process larger datasets more efficiently, driving faster and more accurate decision-making.

Enhanced Computer Vision Libraries: AV1, rocJPEG, and More

AI developers working with modern media and datasets need efficient tools for preprocessing and augmentation. ROCm 6.3 enhances its computer vision libraries—rocDecode, rocJPEG, and rocAL—enabling businesses to handle a variety of workloads from video analytics to dataset augmentation.

Key improvements:

- AV1 Codec Support: Cost-effective, royalty-free modern media processing decoding through rocDecode and rocPyDecode

- GPU-Accelerated JPEG Decoding: Seamless processing of large-scale image preprocessing with built-in fallback mechanisms in the rocJPEG library

- Better Audio Augmentation: Improved preprocessing for robust model training in noisy environments using the rocAL library

From media and entertainment to autonomous systems, these capabilities empower developers to create better AI-powered solutions for real-world applications.

Why Choose ROCm 6.3?

AMD ROCm has made significant strides with each release, and version 6.3 is no exception. It offers cutting-edge tools to simplify development while delivering better performance and scalability for AI and HPC workloads.

By embracing the open-source spirit and continually evolving to meet developer needs, ROCm enables businesses to innovate faster, scale smarter, and stay ahead in competitive industries.

Ready to take the leap? Explore the full potential of ROCm and discover how AMD Instinct accelerators can power your business’s next major breakthrough.

The ROCm documentation center and other channels are being updated as we write this blog with the latest ROCm 6.3 content—stay tuned for more detailed information coming soon!

Visit the AMD ROCm blog for the latest developments, tips, and insights. Don’t forget to subscribe to the RSS feed to receive regular updates directly in your inbox.